In today’s generative AI landscape, raw “model capabilities” are rarely the hardest part. The greater challenge is turning an idea into a product that people come back to, that produces consistent results, and that runs reliably over time. For most developers, the real pressure points lie in workflow orchestration, engineering reliability, and billing design.

This article draws on the experience of an independent developer to explain the journey from “calling a model” to “delivering a product”. It outlines how to connect multiple models into a working production pipeline and how to turn variable inference costs into billing rules that users can understand and manage.

1. From “Model Demo” to “Product Delivery”: The System Matters More Than the Model

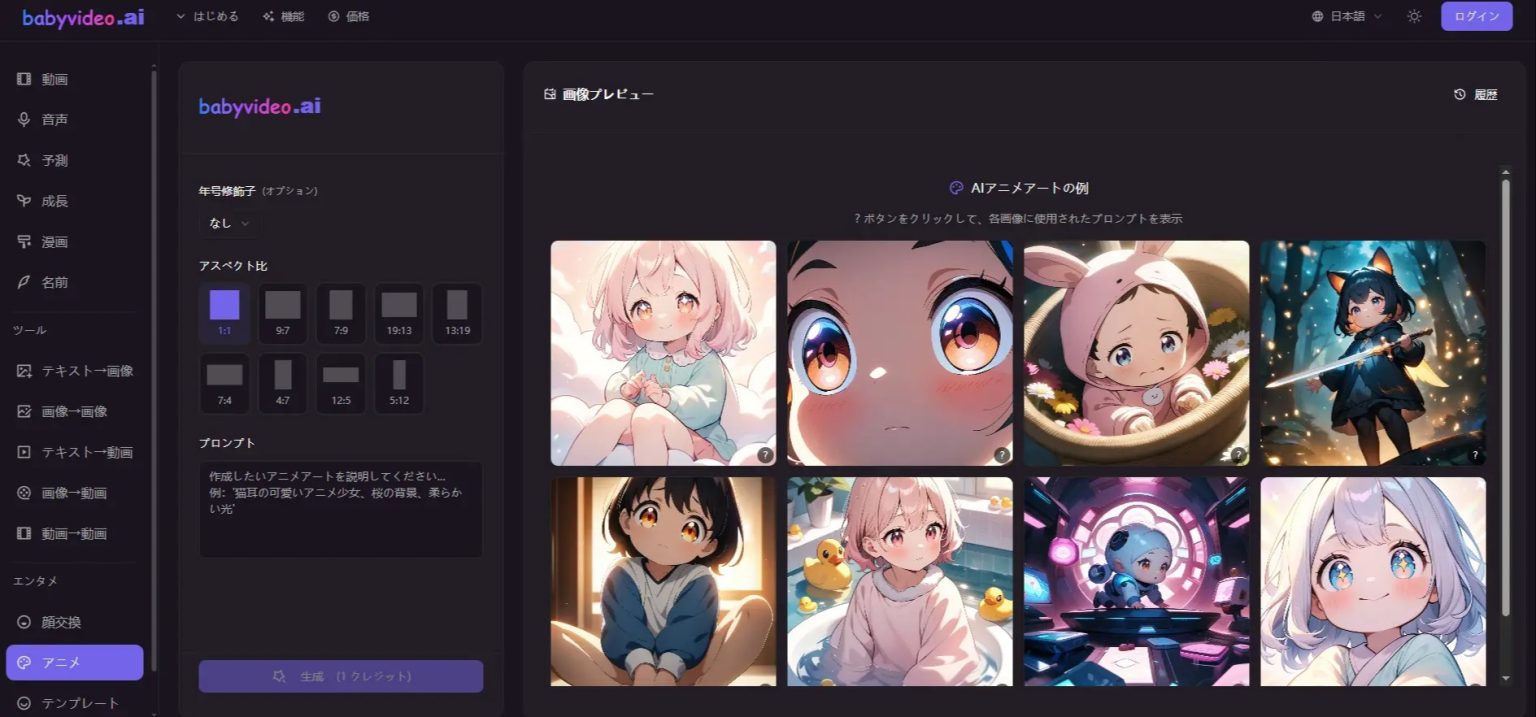

Many AI products begin in a similar way. A developer selects a capable model, builds a simple interface for text or image input, and returns the model's output. This approach works well for early demos.

Once real users arrive, problems surface quickly.

- Input quality varies widely across devices, lighting, framing, and image resolution, which leads to uneven results.

- Requests fail or time out due to network issues, queue delays, model load, or supplier outages.

- Repeated runs with the same input can produce different outputs, which weakens user trust.

- Inference costs fluctuate when requests queue, retry, or take longer than expected.

- Billing becomes hard to explain, whether charges are per request, per second, or per output, and users struggle to estimate costs in advance.

At this point, the challenge is no longer a single “model” but a full end-to-end system that has to be managed.

2. Multi-Model Chaining: Breaking the Workflow into Replaceable Parts

A stable product workflow avoids pushing all tasks through one model. A more reliable approach is to divide the process into clear modules that can be changed without breaking the whole system.

- Input understanding and structuring, where user intent is turned into explicit parameters such as style, subject, or duration.

- Preprocessing, which handles noise reduction, cropping, and size alignment.

- Subject separation, which isolates the subject from the background to improve consistency.

- Subject generation or stylisation, which applies style changes without losing identity features.

- Video or motion generation, which turns static content into controlled motion.

- Post-processing, which can include interpolation, flicker control, compression, or watermarking.

- Delivery and storage, which manages files, links, and reuse.

This structure allows each stage to be improved on its own. If a supplier or model changes, only one module needs updating. It also makes cost tracking and billing mapping clearer.
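Below is a minimal sketch of this modular structure in Python. It assumes every stage shares one interface: a stage takes a job record and returns it with new artifacts attached. The `Job` and `Stage` types, the stub stage functions, the supplier names and the flat per-call costs are all hypothetical placeholders for real model calls. Swapping a supplier then means replacing one list entry, and the per-stage trace doubles as raw material for cost tracking.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Job:
    """Hypothetical job record passed from stage to stage."""
    params: Dict[str, str]
    artifacts: Dict[str, str] = field(default_factory=dict)
    cost: float = 0.0                      # running cost estimate (arbitrary units)
    trace: List[str] = field(default_factory=list)


@dataclass
class Stage:
    name: str                              # e.g. "preprocess", "segment", "animate"
    provider: str                          # model or supplier behind this stage
    unit_cost: float                       # assumed flat cost per call, illustration only
    run: Callable[[Job], Job]


def run_pipeline(job: Job, stages: List[Stage]) -> Job:
    """Run each stage in order, recording which provider handled it and its cost."""
    for stage in stages:
        job = stage.run(job)
        job.cost += stage.unit_cost
        job.trace.append(f"{stage.name}:{stage.provider}")
    return job


def replace_stage(stages: List[Stage], new_stage: Stage) -> List[Stage]:
    """Swap the stage with the same name; every other stage is untouched."""
    return [new_stage if s.name == new_stage.name else s for s in stages]


# Stub stage implementations standing in for real model calls.
def preprocess(job: Job) -> Job:
    job.artifacts["image"] = "cropped_and_resized"
    return job


def segment(job: Job) -> Job:
    job.artifacts["mask"] = "subject_mask"
    return job


def animate(job: Job) -> Job:
    job.artifacts["video"] = f"clip_{job.params['style']}"
    return job


stages = [
    Stage("preprocess", "local", 0.001, preprocess),
    Stage("segment", "supplier_a", 0.01, segment),
    Stage("animate", "supplier_b", 0.20, animate),
]
# Switching the motion supplier touches only one entry.
stages = replace_stage(stages, Stage("animate", "supplier_c", 0.15, animate))
result = run_pipeline(Job(params={"style": "watercolor"}), stages)
print(result.trace)
print(round(result.cost, 3))
```

The trace records which provider produced each artifact. That record is what lets costs be attributed per stage later.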

3. Common Engineering Problems That Break AI Products

3.1 Queues and concurrency must support stability, not just execution.

As usage grows, queues and concurrency limits are often the first things to fail. Systems need defined concurrency limits per model or supplier, clear timeout rules, retry logic, and task priorities. A failure-handling plan must also state when a failed task is retried and when it is refunded.
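The limits, timeouts and retries described above can be combined in one small wrapper. This is a sketch using Python's `asyncio`. It assumes that each supplier call is an async function, and that `ConnectionError` and timeouts are the transient failures worth retrying. `SupplierGate`, the limit values and the backoff parameters are hypothetical. Priorities and refund handling are left out to keep it short.

```python
import asyncio
import random
from typing import Awaitable, Callable, Dict, TypeVar

T = TypeVar("T")


class SupplierGate:
    """Caps concurrent calls per supplier, applies a timeout, and retries transient errors."""

    def __init__(self, limits: Dict[str, int], timeout_s: float,
                 max_attempts: int = 3, base_delay_s: float = 0.5):
        # Create inside a running event loop (older Python versions bind semaphores to a loop).
        self._slots = {name: asyncio.Semaphore(n) for name, n in limits.items()}
        self.timeout_s = timeout_s
        self.max_attempts = max_attempts
        self.base_delay_s = base_delay_s

    async def call(self, supplier: str, fn: Callable[[], Awaitable[T]]) -> T:
        last_exc: Exception = RuntimeError("no attempts made")
        for attempt in range(1, self.max_attempts + 1):
            try:
                async with self._slots[supplier]:  # wait for a free slot for this supplier
                    return await asyncio.wait_for(fn(), timeout=self.timeout_s)
            except (asyncio.TimeoutError, ConnectionError) as exc:
                last_exc = exc
                if attempt < self.max_attempts:
                    # Exponential backoff with jitter; the slot is already released here.
                    delay = self.base_delay_s * 2 ** (attempt - 1)
                    await asyncio.sleep(delay * random.uniform(0.5, 1.0))
        raise RuntimeError(f"{supplier}: gave up after {self.max_attempts} attempts") from last_exc


async def main():
    gate = SupplierGate({"supplier_a": 2}, timeout_s=1.0, base_delay_s=0.01)
    calls = {"n": 0}

    async def flaky_generation():
        # Stand-in for a supplier call that fails twice, then succeeds.
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionError("transient network error")
        return "ok"

    result = await gate.call("supplier_a", flaky_generation)
    return result, calls["n"]


print(asyncio.run(main()))
```

The backoff sleep happens outside the semaphore. A failing task therefore does not hold a supplier slot while it waits to retry.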

Treating each generation task as a state machine, moving from queued to running to success or failure, makes issues traceable and recoverable.
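Here is a sketch of such a state machine. It assumes four states plus a re-queue path for retries; the state names and allowed transitions are illustrative, not a standard. Rejecting illegal transitions and logging every legal one gives the traceability the paragraph describes.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Tuple


class TaskState(Enum):
    QUEUED = "queued"
    RUNNING = "running"
    SUCCEEDED = "succeeded"
    FAILED = "failed"


# Legal transitions; RUNNING -> QUEUED models a retry after a transient failure.
ALLOWED = {
    TaskState.QUEUED: {TaskState.RUNNING, TaskState.FAILED},
    TaskState.RUNNING: {TaskState.SUCCEEDED, TaskState.FAILED, TaskState.QUEUED},
    TaskState.SUCCEEDED: set(),   # terminal
    TaskState.FAILED: set(),      # terminal; refunds are decided from here
}


@dataclass
class Task:
    task_id: str
    state: TaskState = TaskState.QUEUED
    history: List[Tuple[TaskState, TaskState, str]] = field(default_factory=list)

    def transition(self, new_state: TaskState, reason: str = "") -> None:
        if new_state not in ALLOWED[self.state]:
            raise ValueError(
                f"{self.task_id}: illegal transition {self.state.value} -> {new_state.value}")
        self.history.append((self.state, new_state, reason))  # audit trail for debugging
        self.state = new_state


task = Task("task-001")
task.transition(TaskState.RUNNING)
task.transition(TaskState.QUEUED, "timeout, retrying")
task.transition(TaskState.RUNNING)
task.transition(TaskState.SUCCEEDED)

try:
    task.transition(TaskState.RUNNING)  # a finished task cannot restart
except ValueError as err:
    print(err)
```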

3.2 Input control defines output quality.

If input quality is poor, output quality cannot be controlled. Many user images suffer from low resolution, poor framing, or obscured faces.

A product must screen inputs and guide users when content does not meet requirements. It must also apply consistent preprocessing to size, alignment, and subject scale. This step improves success rates and builds trust.
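One way to encode these checks is sketched below in Python, under assumed thresholds. `MIN_SHORT_SIDE`, `MAX_ASPECT` and the 768x768 target are hypothetical values. `face_count` is assumed to come from a face detector that is not shown. The crop box uses the `(left, upper, right, lower)` order that Pillow's `Image.crop` expects, so it could be passed to that call before resizing.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical thresholds; tune them against real failure data.
MIN_SHORT_SIDE = 512
MAX_ASPECT = 2.0
TARGET = (768, 768)


@dataclass
class InputCheck:
    ok: bool
    messages: List[str]  # user-facing guidance, empty when the input passes


def screen_image(width: int, height: int, face_count: Optional[int] = None) -> InputCheck:
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    messages = []
    if min(width, height) < MIN_SHORT_SIDE:
        messages.append(f"The image is {width}x{height}; please upload one at least "
                        f"{MIN_SHORT_SIDE}px on its shorter side.")
    if max(width, height) / min(width, height) > MAX_ASPECT:
        messages.append("The image is very wide or tall; please crop closer to the subject.")
    if face_count is not None and face_count != 1:
        messages.append("Please use a photo with exactly one clearly visible face.")
    return InputCheck(ok=not messages, messages=messages)


def center_crop_box(width: int, height: int,
                    target: Tuple[int, int] = TARGET) -> Tuple[int, int, int, int]:
    """Largest centred crop with the target aspect ratio, as (left, upper, right, lower)."""
    tw, th = target
    if width * th > height * tw:          # too wide: trim left and right
        new_w = height * tw // th
        left = (width - new_w) // 2
        return (left, 0, left + new_w, height)
    new_h = width * th // tw              # too tall or exact: trim top and bottom
    top = (height - new_h) // 2
    return (0, top, width, top + new_h)


print(screen_image(400, 300, face_count=1))
print(center_crop_box(1920, 1080))  # crop box, then resize to TARGET
```

Returning messages rather than a bare pass/fail lets the interface tell users exactly what to fix before any inference cost is incurred.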

3.3 Consistency matters more than surprise.

Generative systems are inherently random, but products must limit that randomness. Fixed seeds, preset templates, and defined quality tiers help users understand outcomes. Offering clear options such as stable, fast, or low-cost modes makes behaviour predictable.
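Fixed seeds and presets can be sketched as a seed derived from the input itself, plus a small table of modes. Everything here is assumed. The mode names mirror the paragraph, but the step counts, resolutions and durations are placeholders, and whether a model accepts a 32-bit seed depends on the supplier. A fixed seed reproduces an output only while the model version and serving setup stay the same, since some GPU inference paths are not bit-for-bit deterministic.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Preset:
    steps: int
    resolution: int
    duration_s: int


# Hypothetical mode table; the values are placeholders, not recommendations.
MODES: Dict[str, Preset] = {
    "stable": Preset(steps=40, resolution=1024, duration_s=5),
    "fast": Preset(steps=20, resolution=768, duration_s=5),
    "low_cost": Preset(steps=12, resolution=512, duration_s=3),
}


def derive_seed(input_bytes: bytes, template_id: str, mode: str) -> int:
    """Same input + template + mode gives the same seed, so reruns repeat the same request."""
    h = hashlib.sha256()
    for part in (input_bytes, template_id.encode(), mode.encode()):
        h.update(len(part).to_bytes(8, "big"))  # length prefix avoids ambiguous concatenation
        h.update(part)
    return int.from_bytes(h.digest()[:4], "big")  # keep the seed within 32 bits


def build_request(input_bytes: bytes, template_id: str, mode: str) -> dict:
    preset = MODES[mode]  # unknown modes raise KeyError instead of silently defaulting
    return {
        "template": template_id,
        "seed": derive_seed(input_bytes, template_id, mode),
        "steps": preset.steps,
        "resolution": preset.resolution,
        "duration_s": preset.duration_s,
    }


print(build_request(b"example image bytes", "portrait_v1", "fast"))
```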

Consistency often leads to better retention than rare standout results.

3.4 Observability keeps costs under control.

Without visibility, optimisation is guesswork. Systems should track queue time, inference time, failure causes, and per-task cost estimates. Supplier reliability also needs monitoring. This data shapes pricing and system improvements.
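Here is a sketch of per-supplier aggregation over hypothetical task records. The field names and failure labels are assumptions; in practice the records would come from the task lifecycle and supplier responses. Cost per successful output is included because it is the figure that pricing ultimately depends on.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median
from typing import Dict, List, Optional


@dataclass
class TaskRecord:
    supplier: str
    queue_s: float                 # time spent waiting before inference started
    inference_s: float             # time spent running on the model
    cost: float                    # per-task cost estimate
    failure: Optional[str] = None  # failure cause, e.g. "timeout"; None on success


def summarize(records: List[TaskRecord]) -> Dict[str, dict]:
    by_supplier: Dict[str, List[TaskRecord]] = defaultdict(list)
    for r in records:
        by_supplier[r.supplier].append(r)

    report = {}
    for supplier, rs in by_supplier.items():
        failures = [r for r in rs if r.failure is not None]
        successes = len(rs) - len(failures)
        causes: Dict[str, int] = defaultdict(int)
        for r in failures:
            causes[r.failure] += 1
        total_cost = sum(r.cost for r in rs)  # failed attempts still cost money
        report[supplier] = {
            "tasks": len(rs),
            "failure_rate": len(failures) / len(rs),
            "median_queue_s": median(r.queue_s for r in rs),
            "median_inference_s": median(r.inference_s for r in rs),
            "total_cost": total_cost,
            "cost_per_success": total_cost / successes if successes else None,
            "failure_causes": dict(causes),
        }
    return report


records = [
    TaskRecord("supplier_a", 1.0, 10.0, 0.2),
    TaskRecord("supplier_a", 3.0, 12.0, 0.2, failure="timeout"),
    TaskRecord("supplier_a", 2.0, 11.0, 0.2),
    TaskRecord("supplier_b", 0.5, 8.0, 0.1),
]
print(summarize(records))
```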

3.5 Storage and delivery need structure and limits.

Outputs must be easy to access but hard to abuse. Good practice includes structured storage paths, time-limited links, separation of public and internal files, and automated cleanup policies.
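The sketch below shows structured paths and time-limited links, using an HMAC signature from Python's standard library. `SECRET`, `BASE_URL` and the path layout are hypothetical. Managed object stores usually provide their own pre-signed URLs (Amazon S3, for example), and those are generally preferable to a hand-rolled scheme; this code only illustrates the idea.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import urlencode

SECRET = b"replace-with-a-real-secret"   # hypothetical signing key; load from a secret store
BASE_URL = "https://cdn.example.com"     # hypothetical delivery host


def storage_path(user_id: str, task_id: str, kind: str, ext: str, public: bool) -> str:
    # visibility/user/task/kind: cleanup jobs and access rules can match on prefixes.
    scope = "public" if public else "internal"
    return f"{scope}/{user_id}/{task_id}/{kind}.{ext}"


def _signature(path: str, expires: int) -> str:
    return hmac.new(SECRET, f"{path}:{expires}".encode(), hashlib.sha256).hexdigest()


def sign_url(path: str, ttl_s: int, now: Optional[int] = None) -> str:
    expires = int(time.time() if now is None else now) + ttl_s
    query = urlencode({"expires": expires, "sig": _signature(path, expires)})
    return f"{BASE_URL}/{path}?{query}"


def verify(path: str, expires: int, sig: str, now: Optional[int] = None) -> bool:
    current = int(time.time() if now is None else now)
    if current > expires:
        return False                      # link has expired
    # Constant-time comparison avoids leaking the signature through timing.
    return hmac.compare_digest(_signature(path, expires), sig)


path = storage_path("user42", "task-001", "video", "mp4", public=True)
print(sign_url(path, ttl_s=600, now=1_700_000_000))
```

Because the expiry time is covered by the signature, a user cannot extend a link by editing the `expires` parameter.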

4. Turning Unstable Inference Costs into Predictable Prices

Pricing must meet three needs at once: users must understand it, costs must stay under control, and edge cases must not break the system.

Two billing approaches appear most often.

4.1 Billing by result.

Charging per image, video, or template is easy to understand. Internally, costs need to be averaged and buffered against risk. This model works well for fixed-length or templated outputs, and separate price tiers for different complexity levels stop cheap jobs from subsidising expensive ones.
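The averaging and buffering can be made explicit with a little arithmetic. Assume that each attempt costs `cost_per_attempt` and succeeds independently with probability `success_rate`. The expected number of attempts per delivered output is then `1 / success_rate`. The tier names, costs, buffer and margin below are hypothetical inputs, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    cost_per_attempt: float  # average cost of one attempt, from observability data
    success_rate: float      # share of attempts that yield a deliverable output


def price_per_result(tier: Tier, risk_buffer: float = 0.2, margin: float = 0.3) -> float:
    if not 0 < tier.success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    if not 0 <= margin < 1:
        raise ValueError("margin must be in [0, 1)")
    # Retrying until success takes 1 / success_rate attempts on average.
    expected_cost = tier.cost_per_attempt / tier.success_rate
    buffered = expected_cost * (1 + risk_buffer)  # cushion for slow runs and outliers
    return round(buffered / (1 - margin), 2)      # margin as a share of the final price


for tier in (Tier("short_template", 0.12, 0.8), Tier("long_custom", 0.60, 0.6)):
    print(tier.name, price_per_result(tier))
```

With these inputs, the two tiers come out at 0.26 and 1.71 per result. Keeping them as separate tiers stops the long jobs' lower success rate from inflating the price of short ones.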

4.2 Billing by resources.

Charging by time or compute tracks cost more closely but is hard for users to predict, so it usually needs up-front cost estimates and spending caps. Many developers use a hybrid approach: they price by result for users while calculating by resources internally.
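Here is a sketch of the hybrid approach. The user sees a fixed per-result price, an internal GPU-seconds estimate sits behind that price, and actual usage is reconciled afterwards. The GPU rate and the cost model (linear in clip length, quadratic in resolution) are assumptions chosen for illustration; a real estimate should be fitted to observed run times.

```python
from dataclasses import dataclass

GPU_RATE_PER_S = 0.0008  # hypothetical supplier price per GPU-second


def estimate_gpu_seconds(duration_s: float, resolution: int,
                         gpu_s_per_output_s_at_512: float = 12.0) -> float:
    """Assumed cost model: linear in clip length, quadratic in resolution (relative to 512 px)."""
    return duration_s * gpu_s_per_output_s_at_512 * (resolution / 512) ** 2


@dataclass
class Quote:
    price: float       # fixed per-result price shown to the user
    est_gpu_s: float   # internal resource estimate behind that price


def make_quote(duration_s: float, resolution: int, price: float) -> Quote:
    return Quote(price=price, est_gpu_s=estimate_gpu_seconds(duration_s, resolution))


def settle(quote: Quote, actual_gpu_s: float) -> dict:
    """The user's charge stays fixed; only the internal cost and margin move."""
    cost = actual_gpu_s * GPU_RATE_PER_S
    return {
        "charged": quote.price,
        "internal_cost": round(cost, 4),
        "margin": round(quote.price - cost, 4),
        "overrun_ratio": round(actual_gpu_s / quote.est_gpu_s, 2),
    }


quote = make_quote(duration_s=5, resolution=768, price=0.5)
print(quote, settle(quote, actual_gpu_s=150))
```

Tracking `overrun_ratio` over many jobs shows whether the estimate, and therefore the quoted price, needs adjusting.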

4.3 Protection mechanisms are essential.

Cost caps prevent extreme cases from draining budgets. Clear failure rules define when refunds, partial credits, or retry tokens apply. Without these, support requests grow quickly.
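The sketch below covers both mechanisms, with money kept in integer cents to avoid floating-point drift. The outcome categories and the refund, credit and retry-token policy are hypothetical examples of the kind of rules the paragraph calls for. `CostGuard` assumes that cost can be metered in chunks while a job runs.

```python
from enum import Enum
from typing import Iterable, Tuple


class Outcome(Enum):
    SUCCESS = "success"
    SUPPLIER_ERROR = "supplier_error"   # failure on our side or the supplier's
    TIMEOUT = "timeout"
    CAP_EXCEEDED = "cap_exceeded"       # stopped by the cost cap
    INPUT_REJECTED = "input_rejected"   # rejected by screening before inference
    USER_CANCELLED = "user_cancelled"


# Hypothetical policy: (refund percent of price, whether a retry token is issued).
POLICY = {
    Outcome.SUCCESS: (0, False),
    Outcome.SUPPLIER_ERROR: (100, False),
    Outcome.TIMEOUT: (0, True),           # free retry instead of a refund
    Outcome.CAP_EXCEEDED: (100, False),
    Outcome.INPUT_REJECTED: (100, False),
    Outcome.USER_CANCELLED: (50, False),  # partial credit for a cancelled run
}


class CostGuard:
    """Accumulates metered cost (in cents) and reports when a job crosses its cap."""

    def __init__(self, cap_cents: int):
        self.cap_cents = cap_cents
        self.spent_cents = 0

    def add(self, cents: int) -> bool:
        self.spent_cents += cents
        return self.spent_cents <= self.cap_cents  # False means stop the job now


def run_with_cap(chunk_costs_cents: Iterable[int], cap_cents: int) -> Tuple[Outcome, int]:
    guard = CostGuard(cap_cents)
    for cents in chunk_costs_cents:       # e.g. cost metered per second of generated video
        if not guard.add(cents):
            return Outcome.CAP_EXCEEDED, guard.spent_cents
    return Outcome.SUCCESS, guard.spent_cents


def compensation(outcome: Outcome, price_cents: int) -> dict:
    refund_pct, retry_token = POLICY[outcome]
    return {"refund_cents": price_cents * refund_pct // 100, "retry_token": retry_token}


outcome, spent = run_with_cap([12, 12, 12, 12], cap_cents=30)
print(outcome, spent, compensation(outcome, price_cents=99))
```

Writing the policy as a table makes the rules easy to show to users and to support staff. Every outcome maps to exactly one answer.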

5. Why System Design Becomes the Real Competitive Edge

Many assume the moat lies in using a stronger model. In practice, long-term advantage comes from how systems handle inputs, retries, queues, and failures. It comes from how templates limit uncertainty, how costs are understood, and how user journeys stay simple.

Models, suppliers, and prices will change. What lasts is the system that turns “uncontrollable generation” into a “deliverable service”.